About Me

Hi, I'm Nikhil, a Research Scientist at Google DeepMind (GDM)! My interests span a broad range of topics, including efficient multimodal learning, active learning, self-supervised representation learning, visual neuroscience and perception, and the alignment of AI systems with human behavior. I completed my PhD at New York University and the Flatiron Institute's Center for Computational Neuroscience under the supervision of Prof. Eero Simoncelli. My research focused on developing computational models of vision that better align with primate visual cortex and human perception. I also hold BS and MS degrees from Stanford University, where my studies spanned signal processing, machine learning, numerical methods, optimization, and computational biology.

In my free time, I'm an avid tennis player, skier, rock climber, and classical guitarist.

Selected Publications

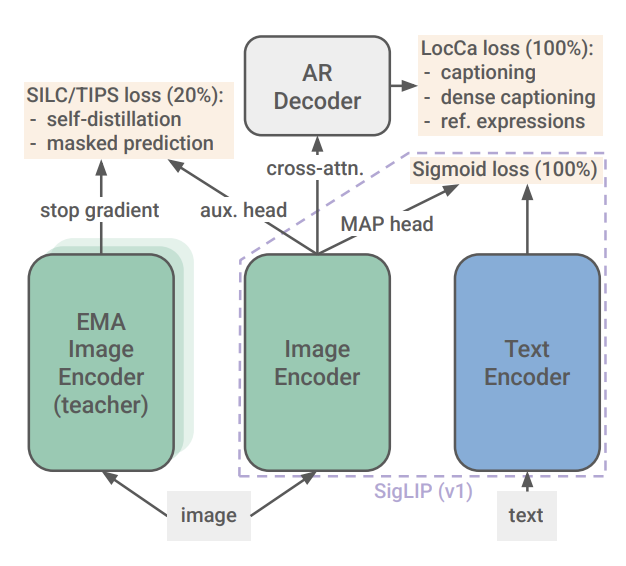

M. Tschannen*, A. Gritsenko*, X. Wang*, M. F. Naeem*, I. Alabdulmohsin*, N. Parthasarathy*, T. Evans*, L. Beyer*, Y. Xia, B. Mustafa, O. Hénaff, J. Harmsen, A. Steiner, and X. Zhai*, "SigLIP 2: Multilingual vision-language encoders with improved semantic understanding, localization, and dense features," Technical Report, arXiv preprint arXiv:2502.14786, 2025. [Paper]

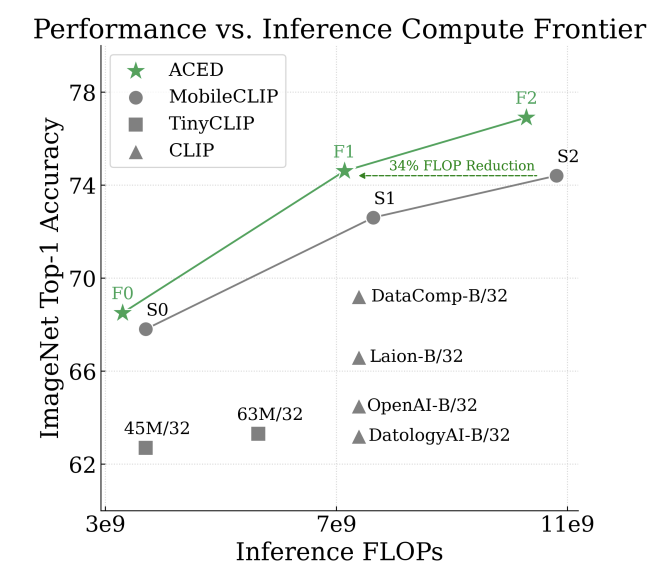

V. Udandarao*, N. Parthasarathy*, M. F. Naeem, T. Evans, S. Albanie, F. Tombari, Y. Xian, A. Tonioni, and O. J. Hénaff, "Active data curation effectively distills large-scale multimodal models," IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025. [Paper]

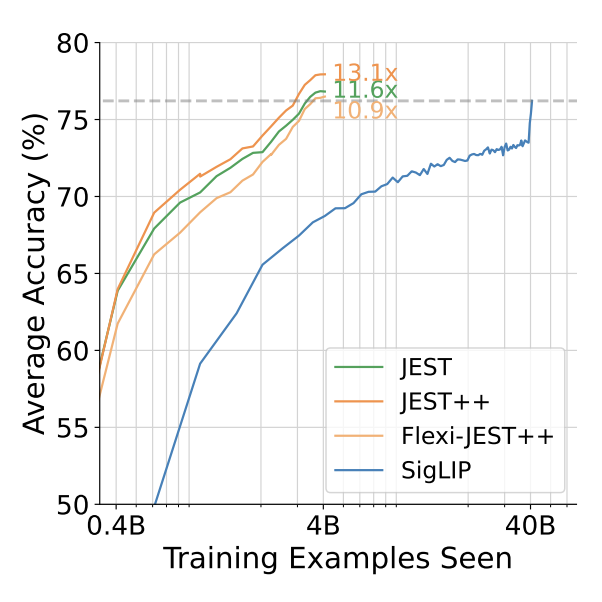

T. Evans, N. Parthasarathy*, H. Merzic, and O. J. Hénaff*, "Data curation via joint example selection further accelerates multimodal learning," Advances in Neural Information Processing Systems, Track on Datasets and Benchmarks, Spotlight Award, 2024. [Paper]

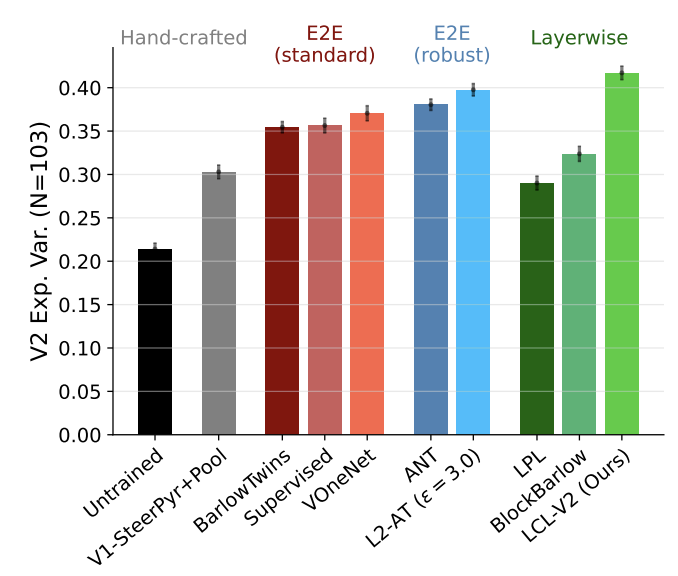

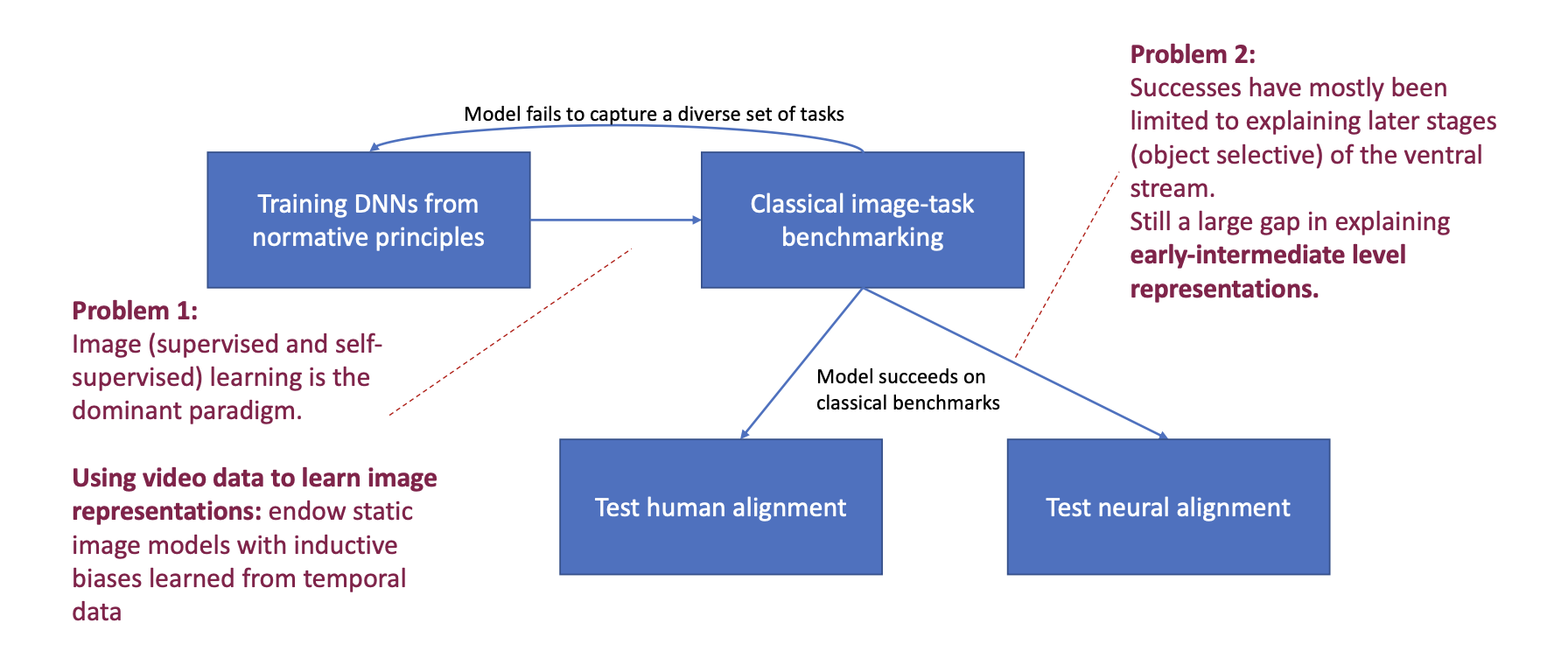

N. Parthasarathy*, O. J. Hénaff, and E. P. Simoncelli, "Layerwise complexity-matched learning yields an improved model of cortical area V2," Transactions on Machine Learning Research (TMLR), Outstanding Certification, 2024. [Paper]

N. Parthasarathy, "Towards aligning artificial and biological vision systems with self-supervised representation learning," Ph.D. dissertation, New York University, 2024. [Thesis]

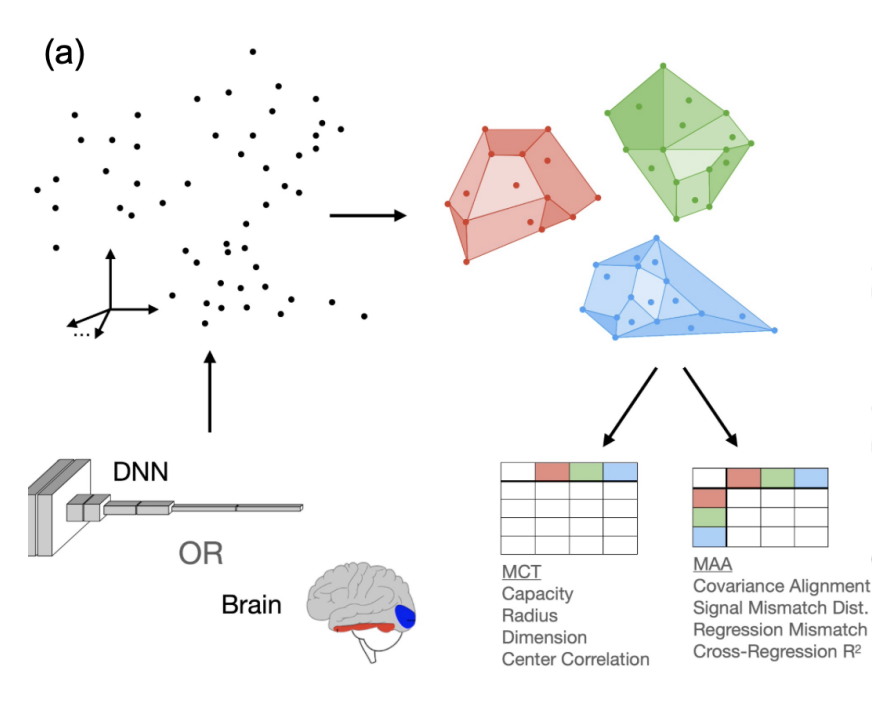

M. Kuoch*, C.-N. Chou*, N. Parthasarathy, J. Dapello, J. J. DiCarlo, H. Sompolinsky, and S. Chung, "Probing biological and artificial neural networks with task-dependent neural manifolds," Conference on Parsimony and Learning, pp. 395-418, 2024. [Paper]

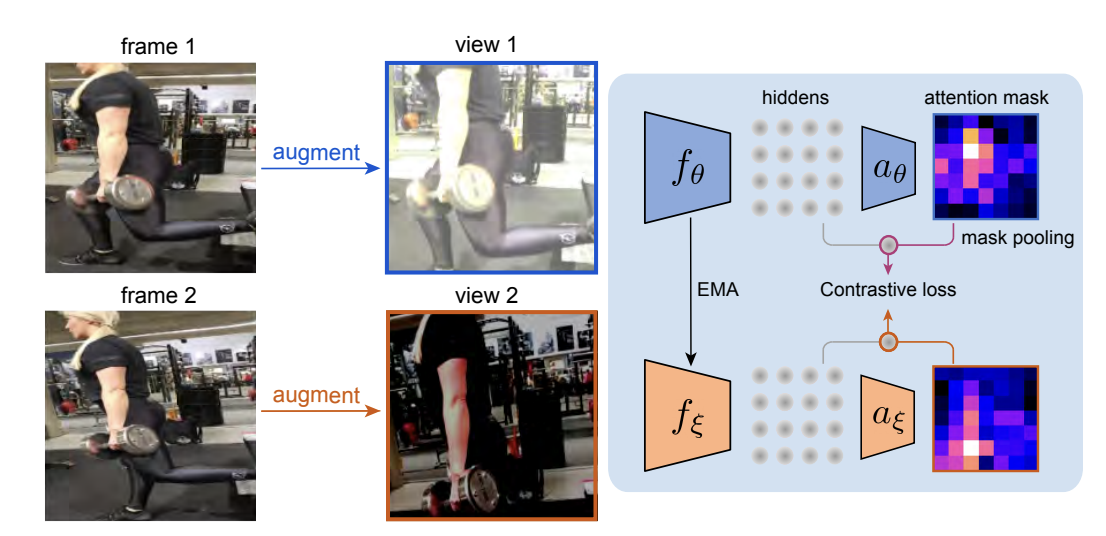

N. Parthasarathy*, S. Eslami, J. Carreira, and O. Hénaff, "Self-supervised video pretraining yields robust and more human-aligned visual representations," Advances in Neural Information Processing Systems, vol. 36, pp. 65743-65765, 2023. [Paper]

I. Balazevic*, D. Steiner*, N. Parthasarathy, R. Arandjelović, and O. Hénaff*, "Towards in-context scene understanding," Advances in Neural Information Processing Systems, Spotlight Award, vol. 36, pp. 63758-63778, 2023. [Paper]

For a full list of publications, please refer to my Google Scholar profile.